The Department of Earth & Planetary Sciences maintains two separate high-performance Linux clusters, as well as a number of standalone dedicated Linux servers, for scientific research.

Our latest acquisition (delivered in early 2017) is a 576-core cluster from Aspen Systems consisting of 24 nodes, each with two 12-core processors and 128GB of RAM. The cluster includes 30TB of shared attached storage, and all components are interconnected through an InfiniBand switch. A full suite of compilers is installed, including the open source GNU compilers and the optimized Intel compilers, along with MATLAB and other scientific applications.

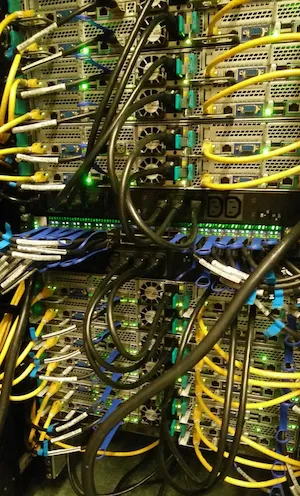

The seismology group has a farm of three Dell Linux servers that share over 120TB of networked disk space managed by two separate RAID controllers. The farm consists of one PowerEdge R900 with 24 cores and 128GB of RAM, and two PowerEdge R920 servers, each with 24 cores (48 hyperthreads). One R920 has 128GB of RAM and the other has 256GB. All three Dell servers have MATLAB and a suite of geophysical software dedicated to the analysis of seismic data.

We also continue to maintain our older Beowulf cluster, first brought online in 2005 and still operational. The original cluster had 48 nodes, and as of Fall 2017, 34 of them remain in operation. By comparison with the newer cluster, each of the original nodes had a single 2GHz 64-bit AMD core and 1GB of RAM (several nodes have since been upgraded to 2GB using RAM salvaged from non-functional nodes). This cluster was built as a farm of single-socket tower servers from Aspen Systems, interconnected with a standard 1Gb Ethernet switch. It was primarily used to run CitCom finite element analysis.